Convolutional autoencoders and LSTMs: Using deep learning to overcome Kolmogorov-width limitations and accurately model errors in nonlinear model reduction

Abstract

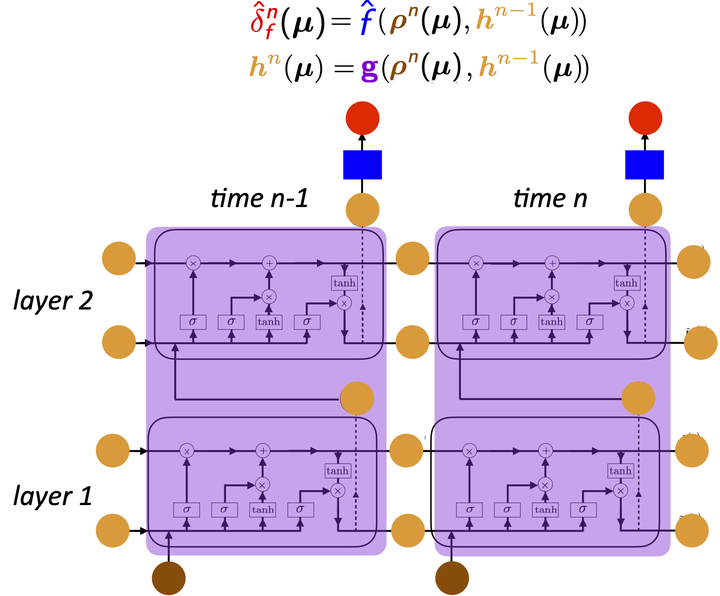

The explosion of artificial intelligence, especially techniques arising from deep neural networks, has yielded exciting advances in fields such as computer vision, natural language processing, and reinforcement learning. However, the application of these methods to problems in engineering and science remains limited. In this talk, we describe how two recent advances in deep learning, namely convolutional autoencoders and long short-term memory (LSTM) recurrent neural networks (RNNs), can be employed to overcome two longstanding challenges in nonlinear model reduction: the Kolmogorov-width limitation of linear subspaces, and accurate error quantification.
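The Kolmogorov-width limitation can be seen in a small toy problem. The following is a minimal sketch, not taken from the talk, assuming a hypothetical 1D Gaussian pulse that translates across the domain (a stand-in for an advection-dominated problem). By the Eckart–Young theorem, the singular values of the snapshot matrix give the best error any n-dimensional linear subspace (POD basis) can achieve on these snapshots, a discrete proxy for the Kolmogorov n-width. The function name `pod_modes_needed` and all parameter values are illustrative assumptions.

```python
import numpy as np


def pod_modes_needed(width, n_x=400, n_t=200, energy=0.999):
    """Count the POD (linear-subspace) modes needed to capture `energy`
    of the snapshot energy for a translating Gaussian pulse (toy example)."""
    x = np.linspace(0.0, 1.0, n_x)
    centers = np.linspace(0.2, 0.8, n_t)
    # Each column is one snapshot u(x, t) = exp(-((x - c(t)) / width)^2)
    snapshots = np.exp(-((x[:, None] - centers[None, :]) / width) ** 2)
    # Singular values bound the best rank-n (linear subspace) approximation error
    s = np.linalg.svd(snapshots, compute_uv=False)
    captured = np.cumsum(s**2) / np.sum(s**2)
    # First index where the captured energy reaches the threshold, plus one
    return int(np.searchsorted(captured, energy) + 1)


if __name__ == "__main__":
    for w in (0.2, 0.05, 0.01):
        print(f"width={w}: {pod_modes_needed(w)} modes")
```

Sharper pulses require many more linear modes to reach the same accuracy, because the shifted snapshots become nearly orthogonal. This slow singular-value decay is the limitation that a nonlinear manifold, such as one learned by a convolutional autoencoder, is intended to sidestep.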

Date

Feb 21, 2020

Location

Providence, Rhode Island