Kevin T. Carlberg

Affiliate Associate Professor of Applied Mathematics and Mechanical Engineering

University of Washington

Biography

Kevin Carlberg is currently on sabbatical. Previously, he was Director of AI Research Science at Meta (5+ years), Distinguished Member of Technical Staff at Sandia National Laboratories (8+ years), and received his PhD from Stanford. He currently holds an Affiliate Associate Professorship of Applied Mathematics and Mechanical Engineering at the University of Washington . He specializes in leading multidisciplinary teams into new technology areas that require fundamental contributions in physical AI and computational science.

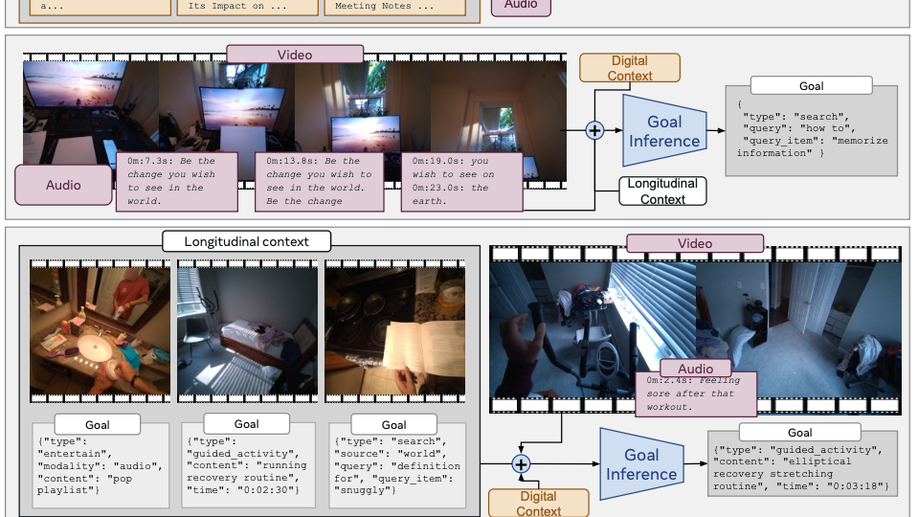

At Meta, Kevin initiated, grew, and led a multidisciplinary (AI, HCI, SWE, PM, Design, UX), cross-org (Reality Labs Research and FAIR) research team focused on building novel AI and simulation technologies for Meta’s wearable computers and VR/MR devices. His technical leadership spanned the domains of physical AI and computational science.

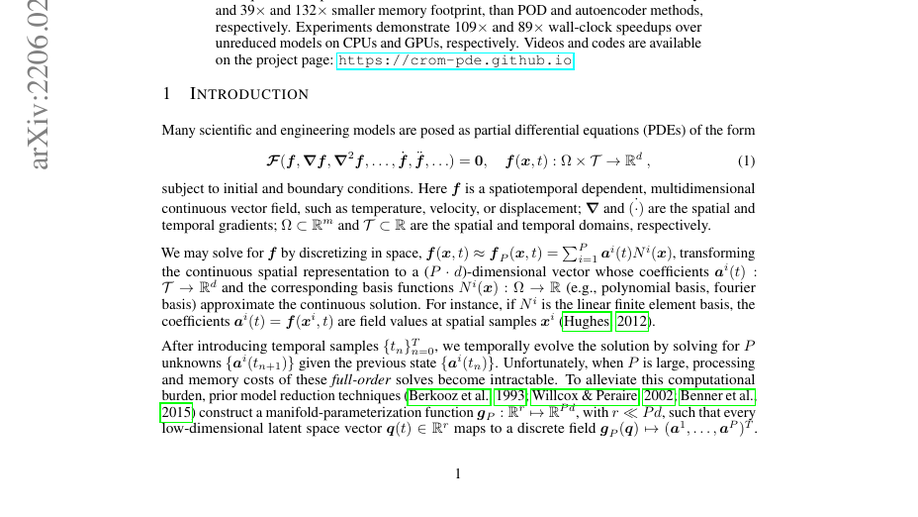

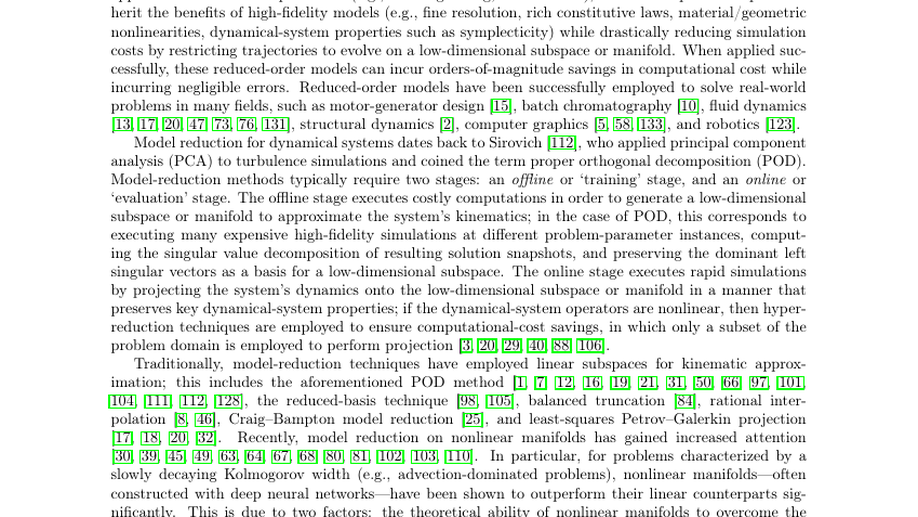

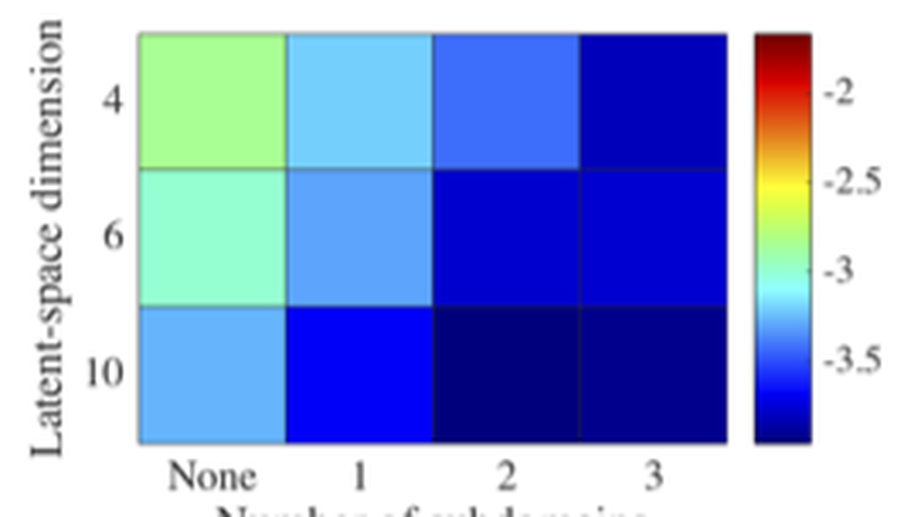

At Sandia National Laboratories, Kevin initiated, grew, and led a research team in developing new computational methodologies to enable extreme-scale physics simulations to execute in near real time for high-consequence national-security applications. His technical leadership spanned the domains of AI-driven model reduction and large-scale uncertainty quantification. His plenary talk at the ICERM Workshop on Scientific Machine Learning summarizes this work.

Interests

- Physical AI

- Computational Physics

- Computational Mathematics

Education

PhD in Aeronautics and Astronautics, 2011

Stanford University

MS in Aeronautics and Astronautics, 2006

Stanford University

BS in Mechanical Engineering, 2005

Washington University in St. Louis